
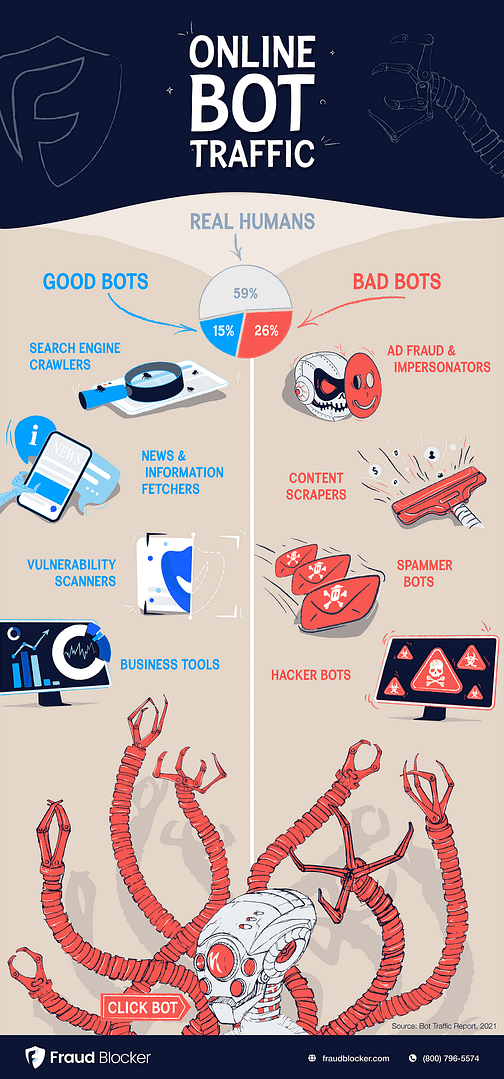

When most people hear the term “bot traffic,” they think of cybercrime or fake social media engagement from view bots. These are both common uses for bot traffic, but bots themselves are not inherently bad. Depending on their purpose, bots can be beneficial for your site and your user experience, or they can be completely neutral. Bots are just programmed scripts that do the job they were made to do.

Bot traffic is a term used to describe non-human traffic to websites and apps. Much of this activity consists of automated tasks such as collecting data, monitoring services and keeping the websites and computer programs we use running smoothly.

However, a large segment of non-human activity online is made up of malicious bots. They may or may not be part of a botnet, and can be used for a wide range of fraudulent and vindictive activities, from DDoS attacks to repetitive tasks such as spam and advertising click fraud.

The volume of non-human internet traffic has been rising year on year, and a 2023 report by Imperva found that bots make up nearly half (49.6%) of all internet traffic, a rise of 2% from the previous year.

Bots used for fraudulent and malicious behavior alone make up nearly a third of total internet traffic, at 32%.

Chart source: Imperva, Bad Bot Report, 2023


Increasingly, businesses are waking up to the importance of knowing how to prevent malicious bot activity on their websites, whether they are storing sensitive information, paying for ads or providing internet-based services.

But how do these bots operate, and what do you need to know before you start looking to block bots on your website?

Most of the activity we do online depends on the data collected by internet bots. These bots are primarily used to legally gather information to improve the user experience. For example, the bots you DO want on your site are the search engine crawlers.

Crawlers such as the Google bots catalog and index your web pages so Google can serve up your content in the search results. Without these crawlers, the internet would be a vastly different place than it is today, and your website wouldn’t show up in the search engines.

Other services such as marketing tools and data analysis tools also use these website crawlers to collect and collate information.

Website monitoring bots are another important part of the internet, which ensure that our websites work as they should. These bots work to monitor loading times, downtimes, and other metrics that provide a health assessment of the site and tell relevant parties where there are bottlenecks and other issues that can be addressed.

There are also aggregation bots that gather information from multiple locations to collate in one place. Think of flight or hotel booking platforms, or insurance comparison sites which rely on these good bots to find the best deals for us from across a huge range of websites. Something that could take a human user many hours of manual searching is made simple.

Scraping bots can be good or bad, depending on the intended purpose of the scraping. “Scraping” means to collect information from a website, often contact information. When done legally, the information from scraping can be used for research and other wholesome activities, but it’s often lifted illegally.

And, increasingly, chatbots and transactional bots are used to automate processes such as customer services or complaint handling. Often these bots are used to interact with users before routing them to a real person, freeing up the human operator to handle more enquiries or carry out other tasks.

Read more: What are Bot Farms?→

Malicious bots usually exist to make someone money, whether that’s by stealing your information or taking down your site. In general, these malicious bots are used to mimic human behaviors such as commenting, clicking or engaging with social media platforms.

Spambots are one of the most common and annoying forms of malicious bot. These bots can fill your comment sections with advertising messages, send endless SMS spam to your phone, or scrape contact info for phishing email attacks. If they’re able to hack your data, they may execute a ransomware attack, in which your data will be held hostage for payment.

Ad fraud and click fraud are some of the most prevalent and profitable (for the fraudster) forms of bot attack. While the two terms are often used interchangeably, there is a subtle difference.

Click fraud can relate to non-genuine clicks from both bots and humans, often designed to deplete your ad budget and disable your ad campaigns. This can be relatively small scale in nature, but can be hugely damaging on a co-ordinated scale.

Ad fraud is typically a more organized form of fraud carried out by fraudulent publishers and organized gangs, often with industrial levels of fake clicks. Here, those malicious bots are used to click ad links, watch videos and engage with content en masse. By using software to switch IP addresses or obscure their true nature, they can rapidly steal huge amounts of money from multiple advertisers, often without the ad platform noticing.

Social media bots are often used to create fake users on platforms such as Instagram, Facebook and Twitter. Fraudsters then offer fake follows, likes or other forms of engagement as a service, often charging just a few dollars for thousands of likes or clicks. These social bots are usually designed to improve the perceived popularity of an account, but they can also engage with paid content, costing advertisers big bucks.

(See how bots on Twitter are manipulating conversations in real time.)

One of the worst bot attacks you can get, other than ransomware, is a distributed denial-of-service (DDoS) attack. A DDoS attack is when a network of bots (a botnet) floods your IP address with requests to overwhelm the server or network. The goal is to render your website or service inoperable. DDoS attacks are carried out by competitors, disgruntled employees, governments, activists, and hackers, sometimes just for fun.

Related: What are click bots? →

When it comes to managing paid campaigns, you’ll want to know who is interacting with your content – and whether you’re getting clicks from genuine human users or a robot.

Fortunately, there are a few indicators for bot traffic. The most reliable way to spot them is for experienced engineers to monitor network requests. Short of that, an analytics tool can help detect surges of non-human visitors, so watch your analytics for sudden, unexplained spikes in traffic.
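As a rough illustration of spotting a surge, the sketch below flags any hour whose session count is far above the typical level. The function name, the sample numbers and the 3x multiplier are all assumptions made for this example, not values from any particular analytics tool.

```python
from statistics import median


def flag_traffic_surges(hourly_sessions, multiplier=3.0):
    """Return the indexes of hours whose session count exceeds
    `multiplier` times the median hourly count.

    The 3x default is an arbitrary starting point, not a recommended value.
    """
    if not hourly_sessions:
        return []
    threshold = median(hourly_sessions) * multiplier
    return [i for i, count in enumerate(hourly_sessions) if count > threshold]


# Hypothetical hourly session counts exported from an analytics tool.
sessions = [120, 135, 110, 128, 900, 131, 125]
print(flag_traffic_surges(sessions))  # [4]
```

A flagged hour is only a prompt to investigate. A legitimate campaign launch or press mention can produce the same spike.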

There are various services which can block bad and malicious bots from interacting with your website.

The problem with blocking bots is that you have to figure out how to stop bad bots while allowing good bots and, even more importantly, without blocking genuine human users. If all bot traffic were blocked, you wouldn’t be able to rank on Google, collect analytics, or maintain the health of your site. And if you are too strict, you run the risk of blocking potential customers.
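To make the trade-off concrete, here is a minimal sketch that sorts requests into three buckets by their User-Agent header rather than blocking everything that looks automated. The token lists and bucket names are illustrative assumptions. A User-Agent string is easy to fake, so a match on a crawler name is only a claim, not proof.

```python
# Illustrative token lists (assumptions for this sketch, not a complete registry).
KNOWN_GOOD_BOT_TOKENS = ("googlebot", "bingbot")
AUTOMATION_TOKENS = ("python-requests", "curl", "headless")


def classify_user_agent(user_agent: str) -> str:
    """Sort a request into a rough bucket based on its User-Agent header."""
    ua = user_agent.lower().strip()
    if any(token in ua for token in KNOWN_GOOD_BOT_TOKENS):
        # Claims to be a search crawler; the claim still needs verifying.
        return "claimed_good_bot"
    if not ua or any(token in ua for token in AUTOMATION_TOKENS):
        # Empty or scripted-looking agents deserve a closer look.
        return "suspect"
    # Everything else is treated as a possible human visitor.
    return "unclassified"


print(classify_user_agent(
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
))  # claimed_good_bot
print(classify_user_agent("python-requests/2.31.0"))  # suspect
```

Keeping an "unclassified" bucket instead of a hard block is one way to avoid turning away genuine visitors.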

You can weed out less advanced attackers by putting CAPTCHAs or honeypots on your site. This often works against simple bots running outdated user agents and browsers.
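A honeypot is typically a form field that is hidden from human visitors, so only an automated script that fills in every field will submit a value for it. The sketch below shows the server-side check under that assumption. The field name `website_url` and the function name are hypothetical.

```python
# Hypothetical name of a form field that is hidden from human visitors with CSS.
HONEYPOT_FIELD = "website_url"


def is_probable_bot(form_data: dict) -> bool:
    """Return True when the hidden honeypot field was filled in.

    A human never sees the field, so any non-blank value suggests a script
    populated the form automatically.
    """
    return bool(str(form_data.get(HONEYPOT_FIELD, "")).strip())


print(is_probable_bot({"email": "jane@example.com", "website_url": ""}))
# False
print(is_probable_bot({"email": "x@example.com", "website_url": "http://spam.example"}))
# True
```

As the text notes, this only catches unsophisticated bots. A script that renders the page or skips hidden fields will pass.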

You can also try blocking known fraud hosting providers and proxy services. In addition, you should protect secondary access points such as exposed APIs and mobile apps, sharing blocking information between systems when possible.
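Blocking by provider usually comes down to checking a visitor’s IP address against a list of network ranges. The following is a minimal sketch using Python’s standard `ipaddress` module. The two ranges are reserved documentation addresses used as placeholders, not real fraud sources, and the function name is an assumption.

```python
import ipaddress

# Placeholder ranges (reserved for documentation), standing in for a real
# blocklist of hosting providers or proxy services.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_blocked(ip: str) -> bool:
    """Return True when the address falls inside any blocked network."""
    try:
        address = ipaddress.ip_address(ip)
    except ValueError:
        # Unparseable input is not blocked here; this sketch errs on the
        # side of not rejecting visitors it cannot identify.
        return False
    return any(address in network for network in BLOCKED_NETWORKS)


print(is_blocked("192.0.2.15"))   # True
print(is_blocked("203.0.113.9"))  # False
```

The same function could sit in front of a website, an API and a mobile backend, which is one simple way to share blocking information between systems.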

Advanced attackers won’t be put off by most of the measures you can take on your own to tighten your web security. The volume and sophistication of cybercrime are increasing daily. New bots are programmed to closely mimic human behavior and slip right past traditional security tools.

Click fraud and ad fraud are among the most damaging forms of online fraud, costing advertisers an estimated $84 billion globally in 2023. With this level of fraud rising every year, and the ad platforms seemingly unable to stem the tide, the onus is on business marketers to stop malicious bots clicking on their ads.

If you’re thinking that Google and Meta should be able to filter out fraud bots, unfortunately this doesn’t seem to be the case, as we discovered in our research, ‘What does Google do about click fraud’.

Put simply, if you run paid campaigns on Google or Meta platforms, chances are that around 22% of your ad engagement is from bots, meaning you’re paying each time an internet bot interacts with your paid campaigns.
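To put that percentage in money terms, here is a quick worked calculation. The 22% share comes from the estimate above, while the $5,000 monthly budget is a hypothetical figure chosen only for illustration.

```python
def estimated_bot_spend(monthly_ad_spend: float, bot_share: float = 0.22) -> float:
    """Estimate how much of an ad budget goes to bot engagement.

    `bot_share` defaults to the roughly 22% figure quoted in the article;
    the real share for any single account will differ.
    """
    if not 0 <= bot_share <= 1:
        raise ValueError("bot_share must be between 0 and 1")
    return round(monthly_ad_spend * bot_share, 2)


# Hypothetical budget of $5,000 per month.
print(estimated_bot_spend(5000))  # 1100.0
```

On those assumptions, about $1,100 of a $5,000 monthly budget would go to clicks that can never convert.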

Fraud Blocker’s proprietary algorithms detect malicious behaviors and automatically block bad traffic sources to keep your site and data secure.

Get started with our 7-day free trial.